Unleashing the Power of Vector Databases: A Hands-On Guide with Supabase and PostgreSQL

In the digital era, where data is more abundant than ever, the ability to sift through this sea of information has become a vital skill. While traditional databases retrieve data based on exact matches, vector databases open up a new horizon: similarity-based queries. This guide walks you through building your own vector database using Supabase and PostgreSQL, offering a pathway to modern data management and retrieval. Here’s a glimpse of how vector databases can transform various domains:

- Semantic Search: Find related content in your knowledge base by comparing meaning, encoded as vectors, rather than exact keywords.

- Chatbots: Build chatbots that remember previous interactions and retrieve relevant context from them.

- Hybrid Search: Merge the precision of semantic search with the flexibility of SQL filtering.

- Image Similarity: Transform images into vectors to detect patterns and similarities.

- Data Management: Streamline your data with automated tagging, deduplication, and pattern detection.

- Recommendations: Craft personalized recommendations for users, from articles and videos to restaurants and more.

One way to tap into this potential is through the PostgreSQL pgvector extension. When integrated with Supabase, it allows you to quickly build your own backend with a vector database from scratch, opening doors to a world of opportunities for data management and retrieval. We will use these tools to show you how easily you can implement such a database by yourself. Let’s dive into the details of how a vector database works and how to implement it!

Understanding Vector Databases

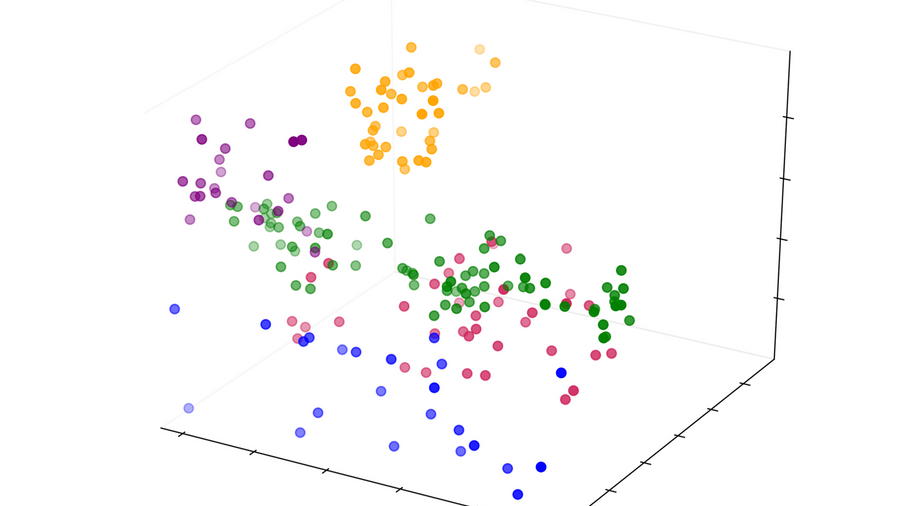

A vector database is purpose-built to store and manage vector data, unlocking new ways to retrieve information based on similarity rather than exact matches. By storing vectors (mathematical representations of data), these databases enable efficient similarity comparisons. In essence, almost anything can be represented as a mathematical vector in some form; these vectors are also called embeddings. A query is turned into a vector in the same way, and the database returns the items whose vectors are most similar to it. The advantage over traditional databases is that we only need to ask for similar items, without knowing exact details about a particular item.

An embedding is a compact numerical representation of data. It transforms complex information, such as text, images, or other types of data, into a fixed-size vector. This vector captures essential characteristics or features of the original data in a way that enables efficient processing and similarity comparison.

To find desired elements in a vector database, we can use similarity search of vectors, where we find the vectors closest to a given query vector according to a specific distance metric (such as cosine similarity or Euclidean distance). Vector databases are optimized to efficiently handle these types of queries, enabling fast and scalable search operations.
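Exact similarity search can be sketched in a few lines: compare the query against every stored vector using Euclidean distance and keep the k closest. This is a minimal in-memory illustration of the idea, not how pgvector is implemented; the function names and sample rows are hypothetical.

```javascript
// Euclidean (L2) distance between two vectors of equal length.
function euclideanDistance(a, b) {
  let sum = 0;
  for (let i = 0; i < a.length; i++) sum += (a[i] - b[i]) ** 2;
  return Math.sqrt(sum);
}

// Exact k-nearest-neighbor search: compare the query with EVERY stored vector
// and keep the k smallest distances. The cost grows linearly with the row count.
function exactKnn(rows, query, k) {
  return rows
    .map((row) => ({ id: row.id, distance: euclideanDistance(row.embedding, query) }))
    .sort((x, y) => x.distance - y.distance)
    .slice(0, k);
}

const rows = [
  { id: 1, embedding: [0, 0] },
  { id: 2, embedding: [1, 1] },
  { id: 3, embedding: [5, 5] },
];
console.log(exactKnn(rows, [0.9, 1.2], 2)); // ids 2 and 1, nearest first
```

Scanning every row is what makes exact search accurate but slow on large tables, which is the trade-off the indexing section below addresses.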

Process

Let’s dissect the process:

- Initially, the embedding model is employed to generate vector embeddings for the content that needs to be indexed.

- This vector embedding is then placed into the vector database, linking it to the original content from which the embedding was derived.

- When a query is made by the application, the same embedding model is utilized to create embeddings for that query. These newly created embeddings are then used to search the database for vector embeddings that are similar. As previously noted, these similar embeddings correspond to the original content that was used in their creation.
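The three steps above can be traced end to end with a toy setup. The sketch assumes a hypothetical toyEmbed function standing in for a real embedding model (it only counts a few fixed keywords) and a plain array standing in for the database; what matters is that documents and queries pass through the same embedding function.

```javascript
// Hypothetical toy "embedding model": counts a few fixed keywords and scales the
// counts to unit length. A real model (such as Supabase/gte-small) encodes meaning,
// but the embed -> store -> query flow is the same.
const VOCAB = ['postgres', 'vector', 'embedding', 'search', 'bread', 'flour', 'yeast'];

function toyEmbed(text) {
  const words = text.toLowerCase().match(/[a-z]+/g) ?? [];
  // Prefix match so that e.g. "embeddings" counts toward "embedding".
  const counts = VOCAB.map((term) => words.filter((w) => w.startsWith(term)).length);
  const norm = Math.hypot(...counts) || 1; // avoid dividing by zero for text with no keywords
  return counts.map((c) => c / norm);
}

// Steps 1 and 2: embed each document and store the vector next to its original content.
const store = [];
function indexDocument(content) {
  store.push({ content, embedding: toyEmbed(content) });
}

// Step 3: embed the query with the SAME function, then rank stored rows by dot
// product (equal to cosine similarity for these unit-length vectors).
function search(queryText, k = 1) {
  const q = toyEmbed(queryText);
  return store
    .map((row) => ({
      content: row.content,
      score: row.embedding.reduce((sum, x, i) => sum + x * q[i], 0),
    }))
    .sort((a, b) => b.score - a.score)
    .slice(0, k);
}

indexDocument('Postgres stores vector embeddings with pgvector');
indexDocument('Bake bread with flour, water and yeast');
console.log(search('How do I search embeddings in Postgres?')); // top hit: the Postgres document
```

Swapping toyEmbed for a real model changes the quality of the matches, not the structure of the pipeline.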

Indexing

Vector databases, including PostgreSQL with pgvector, typically support two kinds of nearest neighbor search:

Exact Nearest Neighbor Search: This method guarantees accuracy by finding the exact closest data point to a query. It’s great when accuracy is super important, but it can take more time, especially for big or high-dimensional datasets.

Approximate Nearest Neighbor (ANN) Search: ANN search focuses on speed. It quickly finds an almost-nearest neighbor, which is handy when fast responses matter most and small deviations from the true nearest neighbor are acceptable.

Which one to use depends on your needs:

- Use Exact Nearest Neighbor Search when you really need accuracy, even if it takes a bit longer.

- Go for Approximate Nearest Neighbor Search when you want fast answers and don’t mind if they’re a bit less precise.
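One common way to decide whether approximate results are precise enough is recall@k: run the same sample queries with exact search and with the ANN index, then measure how many of the true neighbors the index found. The sketch assumes you already have both lists of ids; recallAtK is a hypothetical helper name.

```javascript
// recall@k: the share of the true k nearest neighbors (from exact search)
// that the approximate search also returned. 1.0 means no neighbor was missed.
function recallAtK(exactIds, approxIds) {
  const approx = new Set(approxIds);
  const hits = exactIds.filter((id) => approx.has(id)).length;
  return hits / exactIds.length;
}

// Example: the ANN index returned 2 instead of 7, so one of four true neighbors is missing.
console.log(recallAtK([4, 7, 1, 9], [4, 1, 2, 9])); // 0.75
```

Averaging this value over many representative queries gives a more reliable picture than a single query.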

IVFFlat: An ANN Index

The ivfflat index in pgvector organizes and accelerates similarity searches using a two-step process:

- Space Division: The algorithm applies k-means clustering to create cluster centroids from the data. Each vector is then assigned to its nearest centroid, dividing the space into regions around these centroids. This groups similar vectors together and reduces the search scope.

- Index Creation and Search: ivfflat builds an inverted index that associates each centroid with the set of vectors in its region. During search, the algorithm assumes that the nearest neighbors of a query vector lie in the same region as the query. It calculates the distance from the query to each centroid, then searches only the region of the closest centroid (or of several close centroids) for approximate nearest neighbors.
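The two steps can be sketched in plain JavaScript. This is a simplified illustration of the inverted-file idea under stated assumptions (in-memory arrays, a fixed number of k-means rounds, deterministic initialization from evenly spaced input vectors), not pgvector's actual implementation; all function names are hypothetical. The probes argument controls how many of the closest regions are scanned.

```javascript
// Squared Euclidean distance: enough for comparing distances, no square root needed.
function sqDist(a, b) {
  let sum = 0;
  for (let i = 0; i < a.length; i++) sum += (a[i] - b[i]) ** 2;
  return sum;
}

// Indices of the `count` centroids closest to vector v.
function nearestCentroids(v, centroids, count) {
  return centroids
    .map((c, idx) => ({ idx, d: sqDist(v, c) }))
    .sort((x, y) => x.d - y.d)
    .slice(0, count)
    .map((x) => x.idx);
}

// Step 1, space division: a few rounds of k-means. To keep the sketch deterministic,
// centroids start at evenly spaced input vectors instead of random picks.
function kMeans(vectors, lists, iterations = 10) {
  const step = Math.max(1, Math.floor(vectors.length / lists));
  let centroids = Array.from({ length: lists }, (_, i) => [...vectors[(i * step) % vectors.length]]);
  for (let it = 0; it < iterations; it++) {
    const sums = centroids.map((c) => new Array(c.length).fill(0));
    const counts = new Array(lists).fill(0);
    for (const v of vectors) {
      const [c] = nearestCentroids(v, centroids, 1);
      counts[c] += 1;
      v.forEach((x, i) => { sums[c][i] += x; });
    }
    // Move each centroid to the mean of its members; keep it if its region is empty.
    centroids = centroids.map((old, c) => (counts[c] ? sums[c].map((s) => s / counts[c]) : old));
  }
  return centroids;
}

// Step 2, inverted index: each centroid keeps the list of rows in its region.
function buildIvfIndex(rows, lists) {
  const centroids = kMeans(rows.map((r) => r.embedding), lists);
  const invertedLists = centroids.map(() => []);
  for (const row of rows) {
    const [c] = nearestCentroids(row.embedding, centroids, 1);
    invertedLists[c].push(row);
  }
  return { centroids, invertedLists };
}

// Search: scan only the `probes` regions whose centroids are closest to the query.
function ivfSearch(index, query, k, probes = 1) {
  return nearestCentroids(query, index.centroids, probes)
    .flatMap((c) => index.invertedLists[c])
    .map((row) => ({ id: row.id, distance: Math.sqrt(sqDist(row.embedding, query)) }))
    .sort((x, y) => x.distance - y.distance)
    .slice(0, k);
}

const rows = [
  { id: 1, embedding: [0, 0] },
  { id: 2, embedding: [0, 1] },
  { id: 3, embedding: [1, 0] },
  { id: 4, embedding: [10, 10] },
  { id: 5, embedding: [10, 11] },
  { id: 6, embedding: [11, 10] },
];
const index = buildIvfIndex(rows, 2); // 2 clusters ("lists")
console.log(ivfSearch(index, [0.2, 0.1], 2)); // probes = 1: scans only the region near [0, 0]
```

Only three of the six rows are compared against the query here; on real data that pruning is where the speed-up comes from, and also why a true neighbor sitting just across a region boundary can be missed.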

To tune the trade-off between speed and accuracy, pgvector’s ivfflat lets you adjust two parameters:

- Lists Parameter: Set at index creation, it controls the number of clusters and therefore the size of each region. Higher values make queries faster, since each region holds fewer vectors, but can lower recall because true neighbors are more likely to fall into regions that are not searched.

- Probes Parameter: Set at query time, it controls how many regions are searched. More probes improve recall at the expense of query speed. The pgvector documentation suggests the square root of lists as a starting value.
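To get a feel for these parameters, the helper below turns the starting points given in the pgvector documentation (lists = rows / 1000 for up to 1M rows and sqrt(rows) beyond that; probes = sqrt(lists)) into SQL statements. The helper names are hypothetical; the CREATE INDEX ... USING ivfflat and SET ivfflat.probes statements are standard pgvector syntax. pgvector also advises creating an ivfflat index only after the table contains data, because the clusters are computed from the existing rows.

```javascript
// Starting points from the pgvector documentation; treat them as a first guess
// and tune them against your own recall and latency measurements.
function suggestIvfflatParams(rowCount) {
  const raw = rowCount <= 1_000_000 ? rowCount / 1000 : Math.sqrt(rowCount);
  const lists = Math.max(1, Math.round(raw));
  const probes = Math.max(1, Math.round(Math.sqrt(lists)));
  return { lists, probes };
}

// Build the SQL to run in the Supabase SQL editor. vector_l2_ops matches the <->
// (Euclidean) operator; SET only affects the current database session.
function ivfflatSql(table, column, rowCount) {
  const { lists, probes } = suggestIvfflatParams(rowCount);
  return [
    `CREATE INDEX ON ${table} USING ivfflat (${column} vector_l2_ops) WITH (lists = ${lists});`,
    `SET ivfflat.probes = ${probes};`,
  ];
}

console.log(ivfflatSql('posts', 'embedding', 100_000));
```

For 100,000 rows this yields lists = 100 and probes = 10.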

Vector Similarity

Vector similarity measures how closely two vectors are related. We use it to find the items in a database that are most similar to a query, relying on mathematical measures such as cosine similarity, dot product similarity, or Euclidean distance.

- Cosine Similarity: Measures the cosine of the angle between two vectors in a vector space. It ranges from -1 to 1: vectors pointing in the same direction score 1, orthogonal vectors score 0, and diametrically opposed vectors score -1. Given two vectors $A$ and $B$, the cosine similarity is calculated as:

  $$\cos(\theta) = \frac{A \cdot B}{\|A\|\,\|B\|} = \frac{\sum_{i=1}^{n} A_i B_i}{\sqrt{\sum_{i=1}^{n} A_i^2}\,\sqrt{\sum_{i=1}^{n} B_i^2}}$$

- Dot Product Similarity: Calculates the product of the magnitudes of two vectors and the cosine of the angle between them. It ranges from $-\infty$ to $+\infty$: positive values denote vectors pointing in the same general direction, 0 represents orthogonal vectors, and negative values indicate vectors pointing in opposite directions. The dot product of two vectors $A$ and $B$ is:

  $$A \cdot B = \sum_{i=1}^{n} A_i B_i = \|A\|\,\|B\|\cos(\theta)$$

- Euclidean Distance Similarity: Based on the straight-line distance between two vectors in a vector space. The distance ranges from 0 to infinity, where 0 signifies identical vectors and larger values indicate greater dissimilarity. To turn it into a similarity, the inverse of the Euclidean distance between two vectors $A$ and $B$ is computed as:

  $$\text{sim}(A, B) = \frac{1}{1 + \sqrt{\sum_{i=1}^{n} (A_i - B_i)^2}}$$

Here, $A_i$ and $B_i$ represent the components of vectors $A$ and $B$ in the $i$th dimension, and $n$ is the number of dimensions. The resulting Euclidean similarity falls between 0 and 1.
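The three measures can be computed directly. This is a minimal sketch with hypothetical function names; the Euclidean similarity uses the 1 / (1 + distance) form. The example vectors point in the same direction but differ in length, which shows that cosine similarity ignores magnitude while the other two measures do not.

```javascript
// Dot product: sum of element-wise products.
function dotProduct(a, b) {
  return a.reduce((sum, x, i) => sum + x * b[i], 0);
}

// Cosine similarity: dot product divided by the product of the vector lengths.
function cosineSimilarity(a, b) {
  return dotProduct(a, b) / (Math.hypot(...a) * Math.hypot(...b));
}

// Euclidean distance, turned into a similarity between 0 and 1 via 1 / (1 + d).
function euclideanSimilarity(a, b) {
  const distance = Math.hypot(...a.map((x, i) => x - b[i]));
  return 1 / (1 + distance);
}

const a = [1, 2, 2]; // length 3
const b = [2, 4, 4]; // same direction, length 6

console.log(cosineSimilarity(a, b)); // 1: identical direction
console.log(dotProduct(a, b)); // 18
console.log(euclideanSimilarity(a, b)); // 0.25: the distance is 3
```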

When choosing between cosine similarity, dot product similarity, and Euclidean distance similarity, consider the following scenarios:

Cosine Similarity is suitable when:

- Directional Similarity Matters: If you want to emphasize the similarity in orientation between vectors while disregarding their magnitudes, cosine similarity is appropriate. This is useful when focusing on the relative alignment of features rather than their intensities.

- Normalized Vectors: When your vectors are normalized to unit length, their magnitudes cannot influence the result, and cosine similarity gives the same value as the dot product. This is ideal for scenarios where the length of vectors carries no meaning.

Dot Product Similarity is preferable when:

- Magnitude and Direction Are Important: If both the magnitudes and orientations of vectors are crucial for your analysis, dot product similarity captures this combined information effectively. This is beneficial when the strength and direction of features play a significant role.

- Dimensionality Reduction Results: After applying dimensionality reduction techniques like LSA or PCA, where vectors may not be unit-length, dot product similarity can accurately measure similarity between transformed vectors. It accommodates cases where cosine similarity might not apply directly.

- Efficiency: When computational efficiency is important, dot product similarity can be faster to compute than cosine similarity because it skips calculating the two vector lengths. For normalized vectors, it yields exactly the same result as cosine similarity.

Euclidean Distance Similarity is appropriate when:

- Geometric Proximity Matters: If you’re interested in measuring similarity based on the geometric distance between vectors’ components, Euclidean distance similarity is a suitable choice. It’s valuable when considering how closely vectors are positioned in the multi-dimensional space.

- Inverse Relationship with Distance: Euclidean distance similarity assigns higher values to closer vectors, indicating strong similarity. This can be advantageous when focusing on how near or far vectors are in terms of their feature values.
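The points above about normalized vectors can be checked numerically. For unit-length vectors, the squared Euclidean distance equals 2 - 2 × (dot product), and the dot product equals cosine similarity, so all three measures rank results identically. The sketch below uses hypothetical helper names and made-up 2-D vectors.

```javascript
// Scale a vector to unit length.
function normalize(v) {
  const length = Math.hypot(...v);
  return v.map((x) => x / length);
}
const dot = (a, b) => a.reduce((sum, x, i) => sum + x * b[i], 0);
const squaredDistance = (a, b) => a.reduce((sum, x, i) => sum + (x - b[i]) ** 2, 0);

const query = normalize([1, 0]);
const docs = { A: normalize([3, 1]), B: normalize([1, 1]), C: normalize([-1, 2]) };
const names = Object.keys(docs);

// Highest dot product first vs. smallest distance first.
const byDot = [...names].sort((x, y) => dot(query, docs[y]) - dot(query, docs[x]));
const byDistance = [...names].sort((x, y) => squaredDistance(query, docs[x]) - squaredDistance(query, docs[y]));
console.log(byDot, byDistance); // [ 'A', 'B', 'C' ] [ 'A', 'B', 'C' ]

// The identity behind it: |a - b|^2 = 2 - 2 (a . b) for unit vectors.
for (const name of names) {
  const d2 = squaredDistance(query, docs[name]);
  console.log(name, d2.toFixed(6), (2 - 2 * dot(query, docs[name])).toFixed(6));
}
```

If the vectors are not normalized, the identity no longer holds and the three measures can disagree on the ranking.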

A basic rule of thumb when choosing the similarity metric for indexing your vector database is to match it to the metric used during the training of your embedding model.

Implementation in Supabase with Postgres and pgvector

Discover how Supabase supercharges PostgreSQL with pgvector, a specialized extension for storing vector embeddings and performing advanced similarity searches.

Step 1: Enable the pgvector Extension

Go to your Supabase dashboard, navigate to the database section, and enable the “vector” extension.

Step 2: Create a Table to Store Vector Embeddings

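Create a table with a column specifically for vector embeddings, for example by running SQL in the SQL editor of the Supabase dashboard. The number in vector(384) is the embedding dimension and must match the output size of your embedding model; the Supabase/gte-small model used below produces 384-dimensional vectors.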

```sql
CREATE TABLE posts (
  id SERIAL PRIMARY KEY,
  title TEXT NOT NULL,
  body TEXT NOT NULL,
  embedding vector(384)
);
```

Step 3: Storing Vector Data

To store vector data, you first generate embeddings with a machine learning library or tool. In this example, we’ll use JavaScript with Transformers.js (the @xenova/transformers package) to run gte-small, an embedding model published by Supabase, locally:

```javascript
// Run as an ES module (e.g. a .mjs file) so that top-level await is allowed.
import { pipeline } from '@xenova/transformers';
import { createClient } from '@supabase/supabase-js';

// Replace with your project's URL and anon key
const supabase = createClient('supabase_url', 'public_anon_key');

// Load the gte-small model (downloaded on first use, then run locally)
const generateEmbedding = await pipeline('feature-extraction', 'Supabase/gte-small');

const title = 'Supabase and pgvector!';
const body = 'A guide on implementing a vector database';

// Generate a vector: mean-pool the token embeddings and scale to unit length
const output = await generateEmbedding(body, { pooling: 'mean', normalize: true });
const embedding = Array.from(output.data);

// Store the vector in Postgres via Supabase
const { data, error } = await supabase.from('posts').insert({ title, body, embedding });
if (error) throw error;
```

Using OpenAI’s Embedding Model (Optional)

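If you prefer, you can also use an embedding model from OpenAI. Note that text-embedding-ada-002 is served through OpenAI’s API rather than Transformers.js, so it cannot be loaded with pipeline(). Instead, call the API with the official openai package and use the result in place of the two embedding-generation lines above. This model returns 1536-dimensional vectors, so the table column would need to be vector(1536) instead of vector(384).

```javascript
import OpenAI from 'openai'; // official OpenAI SDK; reads OPENAI_API_KEY from the environment
const { data: [{ embedding }] } = await new OpenAI().embeddings.create({ model: 'text-embedding-ada-002', input: body });
```

Step 4: Implement Similarity Search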

Once you have vector data stored in the database, the next step is to build a similarity search feature that lets users find posts or documentation that are conceptually related. The pgvector extension for PostgreSQL provides three main distance operators for this purpose:

| Operator | Description |
|---|---|
| <-> | Euclidean distance |
| <#> | Negative inner product |
| <=> | Cosine distance |
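To make the table concrete, here is what each operator computes, written out in plain JavaScript as a sketch (the function names are hypothetical; pgvector evaluates these inside Postgres). All three are arranged so that smaller values mean more similar vectors: <#> negates the inner product and <=> subtracts cosine similarity from 1, which lets every operator work with an ascending ORDER BY.

```javascript
const dot = (a, b) => a.reduce((sum, x, i) => sum + x * b[i], 0);
const norm = (v) => Math.hypot(...v);

// <->  Euclidean (L2) distance
const l2Distance = (a, b) => Math.hypot(...a.map((x, i) => x - b[i]));

// <#>  negative inner product (negated so that smaller means more similar)
const negativeInnerProduct = (a, b) => -dot(a, b);

// <=>  cosine distance, i.e. 1 - cosine similarity
const cosineDistance = (a, b) => 1 - dot(a, b) / (norm(a) * norm(b));

const a = [1, 2];
const b = [2, 1];
console.log(l2Distance(a, b)); // about 1.414 (the square root of 2)
console.log(negativeInnerProduct(a, b)); // -4
console.log(cosineDistance(a, b)); // about 0.2 (cosine similarity 0.8)
```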

To execute a similarity search, you can write SQL queries that use these operators. In the example below, the < 0.5 constraint selects records whose Euclidean distance to the query embedding is less than 0.5:

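```sql
-- The query embedding must have the same dimension (384) as the embedding column
SELECT * FROM posts WHERE embedding <-> array[...your_query_embedding_here...]::vector < 0.5;
```

Executing the SQL Query Through JavaScript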

If you prefer to run this SQL query programmatically using JavaScript, here’s how you can do it:

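```javascript
import { createClient } from '@supabase/supabase-js'

// supabase-js filters such as .match() only express equality (column = value), so
// they cannot apply pgvector's <-> operator. Instead, wrap the query in a Postgres
// function and call it through RPC. Run this once in the Supabase SQL editor
// (match_posts and its parameter names are chosen for this example):
//
//   create or replace function match_posts(query_embedding vector(384), max_distance float, match_count int)
//   returns table (id int, title text, body text, distance float)
//   language sql stable
//   as $$
//     select posts.id, posts.title, posts.body, posts.embedding <-> query_embedding as distance
//     from posts
//     where posts.embedding <-> query_embedding < max_distance
//     order by posts.embedding <-> query_embedding
//     limit match_count;
//   $$;

// Initialize Supabase client
const supabaseUrl = 'https://your-project-url.supabase.co'
const supabaseKey = 'your-anon-key'
const supabase = createClient(supabaseUrl, supabaseKey)

// Define your query embedding (same model and dimension as the stored embeddings)
const queryEmbedding = [0.1, 0.2, 0.3 /* ... */]

// Execute the similarity search through the Supabase client
const { data, error } = await supabase.rpc('match_posts', {
  query_embedding: queryEmbedding,
  max_distance: 0.5,
  match_count: 10,
})
if (error) throw error
```

This JavaScript snippet uses the Supabase client to run the query, returning the posts whose embeddings lie at a Euclidean distance of less than 0.5 from the query embedding, closest first. Because it only needs the project URL and the public anon key, this code can be used in any frontend that needs access to your vector database.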
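Choosing the 0.5 threshold is easier with one relationship in mind. The embeddings in Step 3 are generated with normalize: true, so they have unit length, and for unit vectors the Euclidean distance d and cosine similarity are linked by cos = 1 - d² / 2. A distance below 0.5 therefore means a cosine similarity above 0.875. The sketch below, with hypothetical helper names, also mirrors the distance filter in memory.

```javascript
// For unit-length vectors: d^2 = 2 - 2 * cos, so cos = 1 - d^2 / 2.
const distanceToCosine = (d) => 1 - (d * d) / 2;
const cosineToDistance = (cos) => Math.sqrt(2 - 2 * cos);

console.log(distanceToCosine(0.5)); // 0.875
console.log(cosineToDistance(0.875)); // 0.5

// In-memory equivalent of "WHERE embedding <-> query < maxDistance", closest first.
function withinDistance(rows, query, maxDistance) {
  return rows
    .map((row) => ({ ...row, distance: Math.hypot(...row.embedding.map((x, i) => x - query[i])) }))
    .filter((row) => row.distance < maxDistance)
    .sort((a, b) => a.distance - b.distance);
}

const rows = [
  { id: 1, embedding: [1, 0] },
  { id: 2, embedding: [0.96, 0.28] },
  { id: 3, embedding: [0.8, 0.6] },
  { id: 4, embedding: [0, 1] },
];
console.log(withinDistance(rows, [1, 0], 0.5).map((r) => r.id)); // [ 1, 2 ]
```

Row 3 has a cosine similarity of 0.8 with the query, just below the 0.875 implied by the threshold, so it is filtered out.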

Conclusion

As we step further into the age of data-driven solutions, the role of databases in managing and interpreting vast datasets continues to evolve. Vector databases, combined with tools like Supabase and PostgreSQL, are driving this transformation by making it easier than ever to perform advanced similarity-based searches. By implementing a vector database, you’re not just upgrading your data retrieval methods; you’re opening doors to more intelligent systems capable of semantic search, personalized recommendations, and so much more.

So, are you ready to step up your database game? Dive into the exciting world of vector databases and watch your data work smarter, not harder.